August 1, 2010

How Do You Lose Weight? Which Diet Is The Best?

There are a lot of studies about diets and even more studies about weight loss drugs. So why can't we decide which is the best diet?

There's a stack of studies a meter high. "Look," they say, "the evidence is pretty strong that they are all the same." Really? That seems intuitively wrong-- shouldn't some diets be better for certain people? And yet, there's the stack.

This post won't tell you which is the best diet. You can go back to Oprah.com now.

This post is about why the studies can't tell you this.

I.

Most clinical trials have high dropout rates. What do you do with them?

1. You could use only the data of people who finish the trial, so you can say, "it had a 100% cure rate for the 13 people that didn't explode."

2. You could leave the last recorded score intact, and just carry it to the end.

Clinical trials have usually employed #2: LOCF: the last observation carried forward.

Researchers regularly remind us that this type of analysis can potentially underestimate the effect of a treatment. If a patient drops out because of side effects after one day, his score will remain "sick", bringing the overall average down. Perhaps, had he stayed on the medicine, he might have been cured.

Example: consider N=2. One person gets cured, the other person dropped out on day 1 (no improvement.) So the study found that Drug A gets you 50% improvement. Unhelpful.
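The two ways of handling dropouts can be sketched in a few lines. This is only an illustration of the N=2 example: the function names, the dictionary layout, and the intermediate scores are made up for the sketch, with improvement expressed as a percentage (100 = cured, 0 = no change).

```python
def completers_only(patients):
    """Option 1: average final improvement among patients who finished."""
    finished = [p["scores"][-1] for p in patients if p["completed"]]
    return sum(finished) / len(finished)


def locf(patients):
    """Option 2 (LOCF): carry each patient's last recorded score to the end."""
    last_scores = [p["scores"][-1] for p in patients]
    return sum(last_scores) / len(last_scores)


# Hypothetical data for the N=2 example: improvement in percent per visit.
patients = [
    {"scores": [20, 60, 100], "completed": True},  # stayed in, cured
    {"scores": [0], "completed": False},           # dropped out on day 1
]

print(completers_only(patients))  # 100.0
print(locf(patients))             # 50.0
```

Same two patients, same drug: 100% or 50%, depending only on what you do with the guy who left.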

II.

Here is an unrealistic example for the purposes of illustration. Take a bunch of 300 lb individuals. I give half a 100 calorie diet, and the other half a 5000 calorie diet. Read it again. Which will result in more weight loss?

After one day, most of the 100 calorie diet group drop out-- "this sucks"-- and so their last weight carried forward is 300 lbs. Average weight loss at the end= 0 lbs. If the 5000 calorie people can stick to the donut-ham-hamburger diet and lose even a single pound, the study would conclude that the 5000 calorie diet resulted in more weight loss.

The study isn't useless, because it tells you something very important: across a population, the 100 calorie diet is going to fail-- most people like dessert.

But that doesn't tell you what to do. This study does not help with that decision at all. In fact, it may confuse you because now you are confronted with the evidence that the 5000 calorie diet is better, or at least not worse. Bacon up.

Studies can be further misapplied when they (read: media) overgeneralize the results. Are all obese people obese by the same mechanism?

Point: in an LOCF study, dropouts don't just minimize the importance of the study, they ruin the study if all you are doing is looking at the primary outcome. Point 2: you can't take the results of an LOCF study and simply apply them to an individual in front of you. You have to consider the context. "Most of the people who dropped out did so because of X. Is this likely for my current patient?"

III.

Now consider an FDA trial of an appetite suppressant.

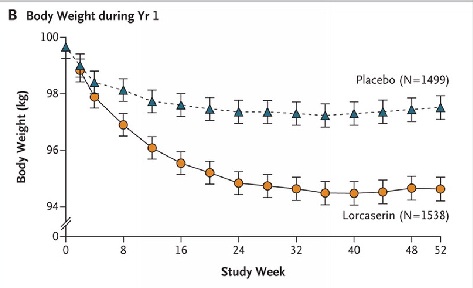

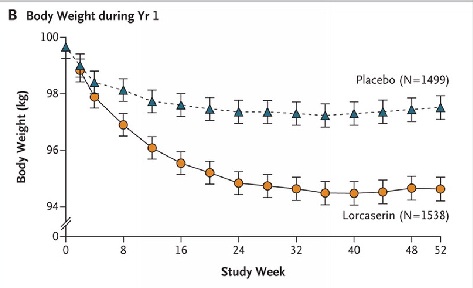

Lorcaserin is a new 5HT2C agonist (think opposite of Remeron) that theoretically promotes satiety and/or suppresses appetite. What happens when you give it vs. placebo to a bunch of 100kg people who are told to exercise and eat 600 fewer calories a day, for a year?

You see that the placebo group lost an average of 2.2 kg, which was about 2% of their baseline body weight, while the lorcaserin people lost about 5.8 kg, which was 5.8% of their body weight.

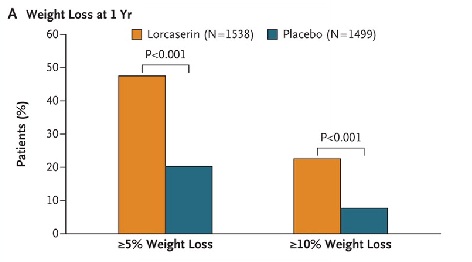

Is that 3.6 kg difference meaningful? No: according to the FDA, you have to beat placebo by 5% or you don't get FDA approval. To the FDA, this failed a primary efficacy measure.

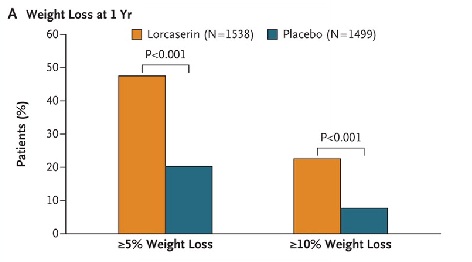

However, in theory, the drug should work in certain kinds of people-- maybe those whose obesity is a function of hunger? How many people were able to lose significant weight, like 10kg? Answer: three times more than with dieting alone.

Is this a drug you'd be willing to try? There is a group of people for whom the drug might be awesome-- if you could predict who those people were.

The study becomes difficult to interpret because 50% of the people dropped out. When did they drop out? Doesn't say. But if 25% of them had dropped out by the fourth month (4kg or less weight loss)-- let alone earlier-- the rest of the people would have had to have lost about 7kg in order to generate an overall average of 5.8kg of loss-- and those guys would have met the 5% superiority required by the FDA.
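The "about 7kg" figure is just a weighted average run backwards. A rough sketch, assuming the 25% refers to a quarter of the lorcaserin arm and that the reported 5.8 kg is the LOCF mean over everyone; the function name and the dropout averages tried below are hypothetical, since the paper doesn't say when people left or what they weighed:

```python
def required_completer_loss(overall_avg, dropout_fraction, dropout_avg):
    """Mean loss (kg) the remaining patients need for the LOCF mean to equal overall_avg.

    Solves: overall_avg = dropout_fraction * dropout_avg + (1 - dropout_fraction) * x
    """
    stayers = 1.0 - dropout_fraction
    return (overall_avg - dropout_fraction * dropout_avg) / stayers


# Try a few guesses for what the early dropouts had lost when they quit.
for dropout_avg in (0.0, 2.0, 4.0):
    needed = required_completer_loss(5.8, 0.25, dropout_avg)
    print(f"dropouts averaged {dropout_avg} kg -> everyone else averaged {needed:.1f} kg")
```

Under these assumptions the remaining patients land somewhere between roughly 6.4 and 7.7 kg, depending on how little the early dropouts had lost.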

I'm not saying that happened (or didn't happen.) I'm saying that for the purposes of practicing medicine, you cannot say "studies show this drug works/fails" without an understanding of why it worked/failed.

IV.

Now take the Atkins diet: is it better than conventional "low fat" diets? Let's ask the gated community socialists at the New England Journal of Mendacity:

(from N Engl J Med 2003; 348:2082-2090)

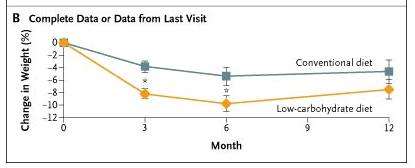

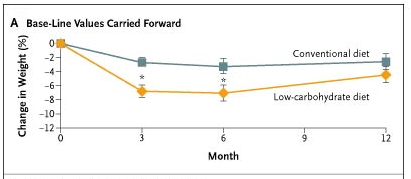

You can see that at 6 months, the Atkins diet people lost more weight.

But, it's LOCF: by month 3, 30% of the conventional diet people bailed, vs. only 15% of the Atkins. If you assume that very little weight loss went on in the first three months, then the weights for the conventional diet will appear heavier than they could have been, dragged "up" by the dropouts who lost no weight because they didn't stick to it.

We won't know what could have happened if all of those conventional dieters stuck to the plan. This isn't to say Atkins didn't work; it is to say that it may not have been better.

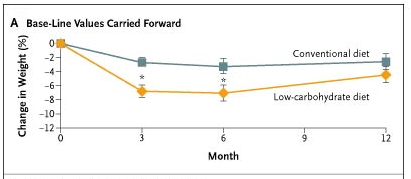

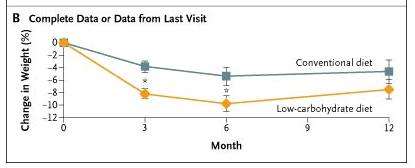

Analyze the data a different way. Instead of carrying forward the morally weak quitters' last scores, the authors reverted them to their baseline scores (i.e. no weight loss), no matter how much weight they had lost in their brief time in the study.

If you take the curve using this analysis (B above) and compare to the LOCF (A below), then there are three possibilities:

If they initially lost a lot of weight, then this analysis would "artificially" worsen the curve (i.e. make it appear like there was no weight loss.) A curve would be higher than B curve.

If they had magically gained weight before dropping out, then this analysis would hide that fact and the B curve would appear lower.

If my assumption is correct-- that they didn't lose much weight in those early months, then the curves should be the same.
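The three possibilities can be checked with toy numbers. A minimal sketch: the function name and all the weights are invented for illustration and are not data from the NEJM paper. It computes a group's mean weight loss twice, once carrying the dropout's last recorded loss forward and once resetting the dropout to baseline.

```python
def mean_loss(completer_losses, dropout_losses, method):
    """Group mean weight loss (kg) under a given rule for handling dropouts.

    method="locf":     dropouts keep their last recorded loss.
    method="baseline": dropouts are reset to 0 kg lost.
    """
    if method == "locf":
        imputed = list(dropout_losses)
    elif method == "baseline":
        imputed = [0.0] * len(dropout_losses)
    else:
        raise ValueError(f"unknown method: {method}")
    everyone = list(completer_losses) + imputed
    return sum(everyone) / len(everyone)


completers = [6.0, 6.0, 6.0]  # hypothetical kg lost by people who finished
scenarios = {
    "lost a lot before quitting": [4.0],
    "gained before quitting": [-2.0],   # negative loss = weight gain
    "lost almost nothing": [0.0],
}

for label, dropouts in scenarios.items():
    a = mean_loss(completers, dropouts, "locf")
    b = mean_loss(completers, dropouts, "baseline")
    print(f"{label}: LOCF {a:.2f} kg, baseline {b:.2f} kg")
```

Only in the third scenario do the two analyses agree, which is why nearly identical curves suggest the dropouts hadn't lost much before they left.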

Note that for the conventional diet, the curves are almost the same: they didn't lose much weight, and they dropped out. The effect at month 6, therefore, is to make the overall weight loss of the conventional group appear less.

In other words, conventional diets may not be as good; or they may be better. The same can be said about Atkins, which is to say, nothing can be said at all.

The point here is about the studies showing Atkins is superior: they really mean only that more people stick to it.

V.

Now to the meat of the issue. What about all the studies that show that the diets are the same? Surely those aren't flawed?

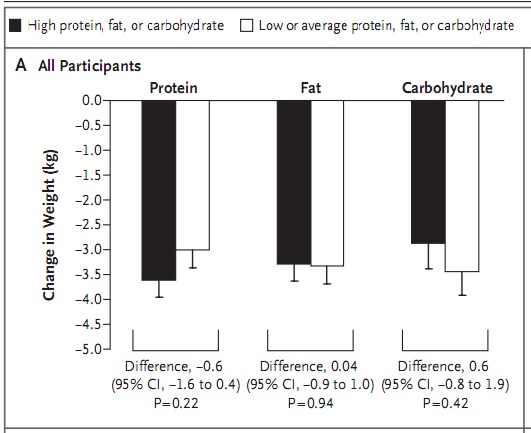

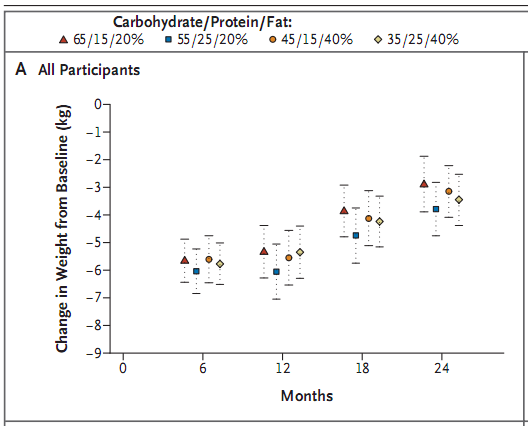

Let's find out if the percentage of fats, carbs, and protein matter for weight loss. Let's pull a major study from the stack, something from the NEJM:

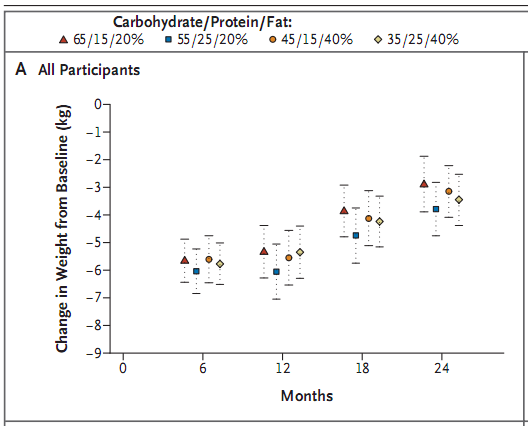

800 people put on various diets: high/low protein, high/low fat, and a range of carbs, e.g.,

20% fat, 15% protein, 65% carb

20% fat, 25% protein, 55% carb

40% fat, 15% protein, 45% carb

40% fat, 25% protein, 35% carb

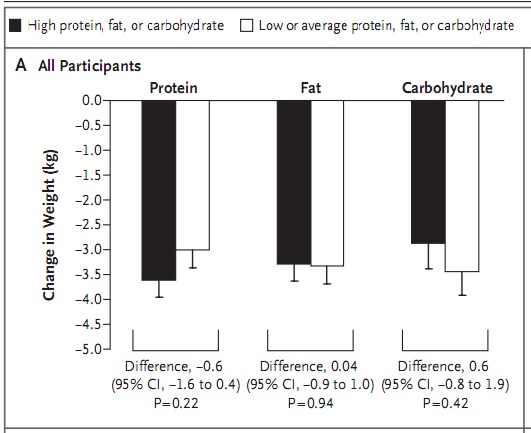

Check back at the midterm elections. Which was the best?

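From the Discussion:

"In this population-based trial, participants were assigned to and taught about diets that emphasized different contents of carbohydrates, fat, and protein and were given reinforcement for 2 years through group and individual sessions. The principal finding is that the diets were equally successful in promoting clinically meaningful weight loss and the maintenance of weight loss over the course of 2 years. Satiety, hunger, satisfaction with the diet, and attendance at group sessions were similar for all diets."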

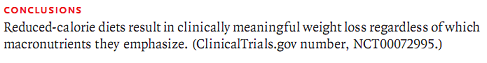

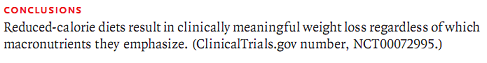

Or, from the abstract:

All diets resulted in the same weight loss! This proves it! Oh, wait, this was published in NEJM, where peer review= spell check. Better look more closely.

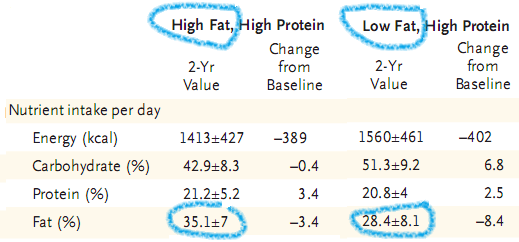

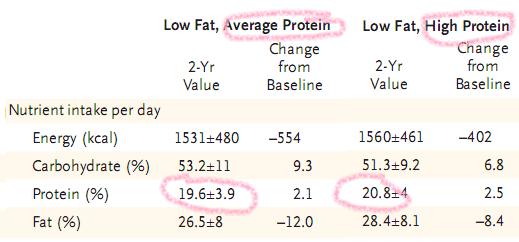

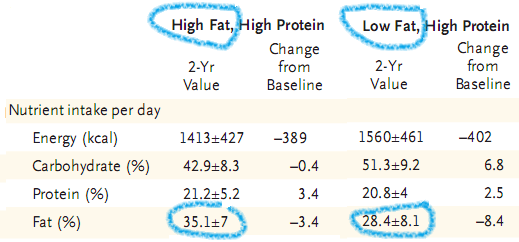

Though patients were told to eat a high fat (40%) vs. low fat (20%) diet, using a fixed protein amount, here's what they actually ate:

That difference of 20% has been reduced to a difference of 7%, i.e. what should have been a difference of 33g of fat is now a difference of 11g.
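The percent-to-grams conversion is simple enough to check. A back-of-envelope sketch, assuming the standard 9 kcal per gram of fat and backing the daily calorie intake out of the post's own "20% = 33g" figure; that intake is inferred for the sketch, not a number taken from the paper, and the function names are made up.

```python
KCAL_PER_GRAM_FAT = 9  # standard energy density of fat


def implied_daily_kcal(fat_grams, fat_fraction):
    """Daily intake (kcal) at which fat_grams of fat is fat_fraction of calories."""
    return fat_grams * KCAL_PER_GRAM_FAT / fat_fraction


def fat_grams_for(fat_fraction, daily_kcal):
    """Grams of fat that make up fat_fraction of daily_kcal."""
    return fat_fraction * daily_kcal / KCAL_PER_GRAM_FAT


daily_kcal = implied_daily_kcal(33, 0.20)       # intake implied by "20% = 33 g"
intended_gap = fat_grams_for(0.20, daily_kcal)  # the gap the diets were supposed to have
actual_gap = fat_grams_for(0.07, daily_kcal)    # the gap people actually ate

print(f"implied intake: about {daily_kcal:.0f} kcal/day")
print(f"intended gap: {intended_gap:.1f} g, actual gap: {actual_gap:.1f} g")
```

On that assumed intake the 7% gap comes out between 11 and 12 grams of fat a day, in line with the roughly 11g above: about a tablespoon of butter.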

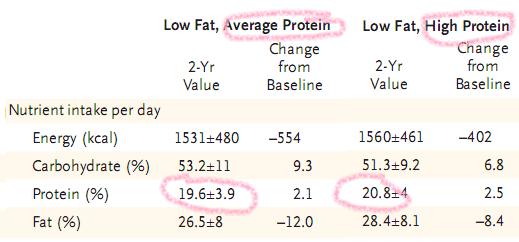

What about high (25%) vs. low (15%) protein diets?

That 15% vs. 25% difference in average vs. high protein diets has been reduced to no difference whatsoever. In fact, these people all managed to eat 20% protein no matter what diet they were supposed to be on.

So this study did not test various diets against one another; it tested essentially the same diet four times. And it found that pretending to be on a high/low protein/fat diet has very little effect on the outcome, which if written that way would never have made it into Children's Highlights, let alone NEJM.

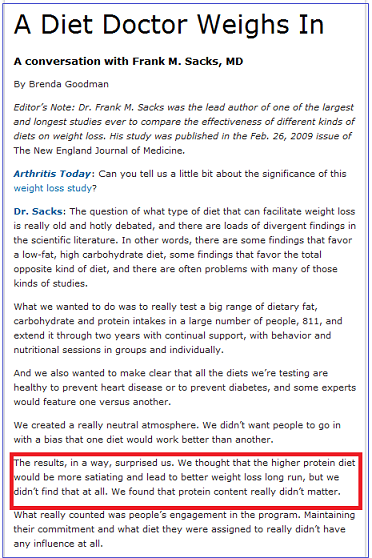

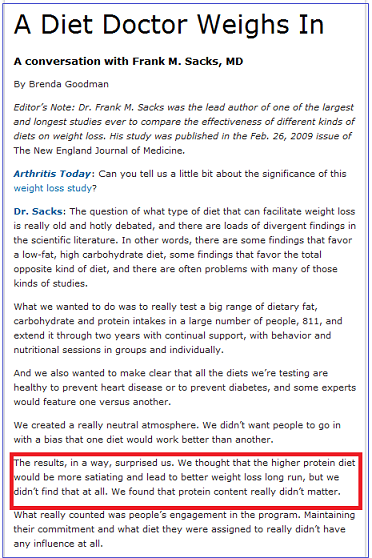

Strangely, that's not the finding reported in the media-- or even by the lead author himself:

How could it matter if it wasn't actually different?

VI.

So what do these studies stacked a meter tall tell us? That lorcaserin doesn't work (except when it does work awesomely); conventional diets suck (except in those who stick to them); Atkins diets may work or suck, and most people give up after Labor Day anyway, just in time for the season premiere of The Bachelorette. (FYI: She's on a Pinot and apples diet.)

If you were hoping the effect size or p values were going to guide you, you would have been led astray. Those p values aren't telling you anything useful; they are at best confusing and at worst misleading. A glance at the methodology has more practical value than the little asterisk above a score at month 6.

Look at that stack of studies a meter high. I've just fed them to a goat. Tasty. Can you use them to say whether you should take lorcaserin? Whether Atkins was better than conventional diet? Can you use them to guide your decision about whether you should eat more bacon or more ice cream? Nope. And they will never be able to, because the purpose of these studies is not to determine the answer, the purpose of these studies is to be published, truth be damned.

So I'm sticking to bacon. And getting my sugar from you know what.

----

http://twitter.com/thelastpsych
