WRONG

What Happens To Fake Studies?

Continue reading:

"What Happens To Fake Studies?" ››

Score: 2 (2 votes cast)

Christopher Columbus Was Wrong

Continue reading:

"Christopher Columbus Was Wrong" ››

Score: 4 (4 votes cast)

The Media Is The Message, And The Message Is You're An Idiot

You mean I get to pick?

Continue reading:

"The Media Is The Message, And The Message Is You're An Idiot" ››

Score: 3 (3 votes cast)

Either Conservatives Are Cowards Or Liberals Are...

A news story, talked about ad nauseam, concerning a study in Science that no one will bother to read.

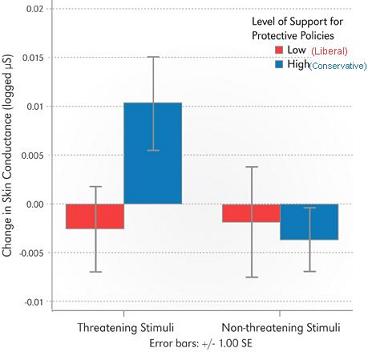

Subjects-- liberals and conservatives-- are shown random pictures of scary stuff (spider on a person's face) interspersed between photos of neutral stuff (bunnies.) Conservatives exhibit much more fear (e.g. startle response, skin response) than liberals.

In case the political implications of this study are not obvious, these are the titles of the news reports about the study:

- Science News: The Politics of Fear

- Slate: Republicans Are From Mars, Liberals Are From Venus

- Scientific American: Are you more likely to be politically left or right if you scare easily?

- Freakonomics blog: Don't scream, you'll give your ideals away

Etc. The message is clear: conservatives get scared more easily than liberals.

Right? That's what the titles say-- I'm not off base here, right? There's no other possible way to interpret them?

II.

The methodology is fine-- but the interpretation is so demonstrably flawed that they are actually interpreting the results backwards.

Here's the most important line of all-- found only in the SA article-- in the second to last paragraph, of course:

People who leaned more politically left didn't respond any differently to those [scary] images than they did to pictures of a bowl of fruit, a rabbit or a happy child.

Really? Spider on face vs. happy child? No difference?

That extra bit of info doesn't even appear in the Science News story-- or anywhere else, for that matter.

The graph shows that liberals and conservatives have a trivial skin response to neutral pictures, and liberals show no difference in response when confronted with a scary photo.

So the actual finding isn't that conservatives are fearful; it's that liberals seem not to exhibit much response to scary photos.

III.

But it's actually a little worse than that.

Such tests of startle and fear aren't typically used to see how scared people are; they are used specifically to find out how scared people aren't. For example, they are used to evaluate psychopathy, and the results are the same as here-- psychopaths have decreased responses, compared to normal people, to aversive photos.

So which is it? Are conservatives fearful, or are liberals psychopaths?

I'm not picking sides in the debate, but I am pointing out how this study missed the actual result-- liberals are less fearful than would be expected-- and then the study was publicized in the media with an entirely backwards inference, that conservatives scare easily.

But it sounds like science, conducted by scientists; it's published in Science, and then publicized in Scientific American. It must be true.

(more)

Continue reading:

"Either Conservatives Are Cowards Or Liberals Are..." ››

Score: 8 (10 votes cast)

Advancing Paternal Age And Bipolar Disorder

There is considerable evidence that advanced paternal age raises the risk of autism. It appears that the same is true in schizophrenia.

Bipolar disorder, however, is an entirely different matter.

Continue reading:

"Advancing Paternal Age And Bipolar Disorder" ››

Score: 2 (2 votes cast)

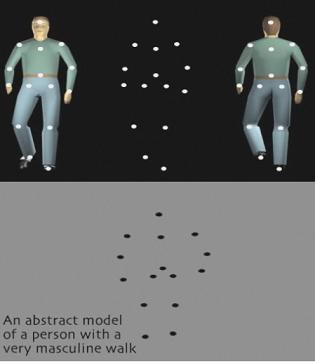

Odd Finding of Gender Differences In Walking

A story about a study which claims to find a relationship between the perception of gender-- are the walking dots men or women-- and the direction those dots are going-- towards you or away.

Continue reading:

"Odd Finding of Gender Differences In Walking" ››

Score: 0 (0 votes cast)

Abusive Teens Force Their Girlfriends To Get Pregnant! (Don't Let The Truth Get In The Way Of A Good Story)

Continue reading:

"Abusive Teens Force Their Girlfriends To Get Pregnant! (Don't Let The Truth Get In The Way Of A Good Story)" ››

Score: 3 (3 votes cast)

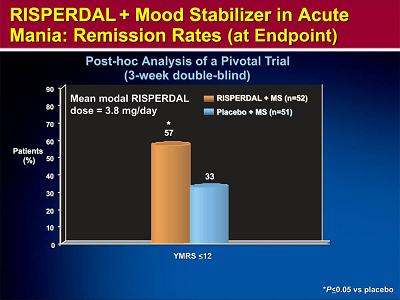

Mood Stabilizer + Antipsychotic for Bipolar

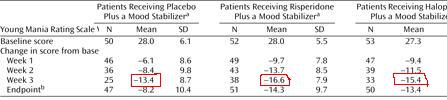

This slide-- taken from a drug company program, like many others-- shows that using a mood stabilizer + antipsychotic is better than mood stabilizer alone.

Look carefully. This is what is wrong with psychiatry.

As you can see, Risperdal + Depakote (orange) is better than Depakote alone (blue.)

This is not a finding unique to Risperdal. Every antipsychotic has virtually identical data for adjunctive treatment, which is good, because they shouldn't have different efficacies.

So given that 8 other drugs have identical findings, these data suggest that, essentially, two drugs are better than one.

That's obvious, right? That's what the picture shows? Well, here's what's wrong with psychiatry: without looking at the slide, tell me what the y-axis was. Write it here:______________

The problem in psychiatry is that no one ever looks at the y-axis. We assume that the y-axis is a good one, that whatever measure is used is worthwhile. We assume that the y-axis has been vetted: by the authors of the study, by the reviewers of the article, by the editors of the journal, and by at least some of the readers. So we focus instead on statistical significance, study design, etc. Well, I'm here to tell you: don't trust that anyone has vetted anything.

The y-axis here is "% of patients with a YMRS <12." What's a YMRS? A mania scale. But what is a 12? Is it high? Low? What's the maximum score? What counts as manic? What questions does the YMRS ask, how does it measure the answers? You don't know? Again-- we figure someone else vetted it. The YMRS is a good scale because that's the scale we use.

Forget about the YMRS-- what does "% of patients" mean? If this is an efficacy in adjunct treatment study, why not have the y-axis be just the YMRS, to show how much it went down with one or two drugs?

Because that's the trick. This y-axis doesn't say "people got more better on two drugs." It says, "more people on two drugs got better."

Pretend you have a room with 100 manics. You give them all Depakote, and 30% get better. Now add Risperdal-- another 30% get better. But that doesn't mean the Depakote responders needed Risperdal, or the Risperdal responders needed Depakote-- or the other 40% got anything out of either drug.

It could be true that the effects are additive-- but this study doesn't show that. No study using "% of patients" can speak to synergistic effects. In other words, these studies do not say, "if you don't respond to one drug, add the second." They say, "if you don't respond to one drug, switch to another drug." They don't justify polypharmacy. They require trials at monotherapy.
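To make the room-of-100 arithmetic concrete, here is a minimal Python sketch. Every number in it is an assumption lifted from the thought experiment (30 Depakote responders, 30 different Risperdal responders), not data from any trial, and the names `responder_rate`, `combo_rate` and `switch_rate` are mine. It only shows that a "% of patients" axis can read exactly the same whether everyone gets both drugs at once or the non-responders are simply switched.

```python
# Hypothetical illustration of the "100 manics" thought experiment.
# All counts are invented assumptions, not results from a study.

def responder_rate(responders, n):
    """Percent of patients counted as 'better' (the slide's y-axis)."""
    return 100 * len(responders) / n

N = 100

# Assumption: patients 0-29 get better on Depakote alone.
depakote_responders = set(range(0, 30))
# Assumption: patients 30-59 get better on Risperdal alone (a different group).
risperdal_responders = set(range(30, 60))

# Strategy A: everyone gets both drugs from day one.
mono_rate = responder_rate(depakote_responders, N)
combo_rate = responder_rate(depakote_responders | risperdal_responders, N)

# Strategy B: Depakote first; only the non-responders are switched to Risperdal.
non_responders = set(range(N)) - depakote_responders
rescued_by_switch = non_responders & risperdal_responders
switch_rate = responder_rate(depakote_responders | rescued_by_switch, N)

print(f"Depakote alone:        {mono_rate:.0f}% better")
print(f"Both drugs at once:    {combo_rate:.0f}% better")
print(f"Switch non-responders: {switch_rate:.0f}% better")
```

Under these assumptions nobody needed two drugs at the same time, yet the combination bar is still twice as tall as the monotherapy bar. If responders overlap or the effects really are additive, the numbers change, which is exactly what a "% of patients" axis cannot tell you.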

So you may ask, well, do two drugs lower mania better than one drug, or not?

Depending on what week you look at, MS alone reduces the score by 13; two drugs give you another 3-6 points. On an 11 question scale, rated 0-4. So no, it's not better.

I have yet to meet someone who doesn't interpret these studies as supportive of polypharmacy, and it's not because they aren't critical. The reason for the blindness is the paradigm: they think-- want-- the treatment of bipolar to be the same as the treatment of HIV, or cancer, or pregnancy. The important difference is that these other diseases are binary: once you get them, nothing you do makes it worse. Take pregnancy. If two chemicals combined lower the rate of pregnancy, it is clearly worth it to take both. One chemical might have been enough, but who wants to find out? So you take the risk, and you eat both. This is also my argument against making Plan B OTC. Bipolar is not like this: if one or two drugs in succession fail, or things get bad, you can always resort to polypharmacy later.

Again, it may be true that polypharmacy is necessary. Maybe 3-6 points are needed. Maybe two drugs gets you better faster. But it can't be the default, it can't be your opening volley. Because I can't prove two drugs are better than one, but I can prove they have twice as many side effects, and are twice as expensive.

At minimum, if polypharmacy is successfully used to break an acute episode, you should then try to reduce the dose and/or number of drugs.

Now, here's the homework question: if all these antimanics have about the same efficacy, and polypharmacy should be third or fourth line, why do we start with Depakote? Is it better? Safer? Cheaper? What are the reasons behind our practice?

Score: 4 (4 votes cast)

"Because I Said So"

I have written endlessly about how language controls psychiatric thought, and that it will be impossible for psychiatry to progress while semiotics trumps science. Here is a recent example:

In the Oct 2006 JCP, there is an article about the efficacy of Depakote ER for acute mania.

As I read the introduction to this useless paper, I get kicked in the throat by this:

"Currently approved treatments of the acute manic phase of bipolar disorder can be categorized primarily as mood stabilizers (e.g. divalproex sodium, lithium, and carbamazepine) or as atypical antipsychotics (i.e. aripiprazole, olanzapine, quetiapine, risperidone, and ziprasidone).(5)"

Note carefully that the authors have taken a set of medications and artificially divided them into "mood stabilizers" OR "antipsychotics." Ok, well, wouldn't it be great if reference 5 actually justified this? Using data or logic? Well, it doesn't.

But the damage has been done. Unless you have a computer with FIOS and three monitors and are reading every reference, a quick skim registers that there is a reference, which you assume has been checked, and you move on. In fact, the authors here don't even feel that a reference is necessary-- everyone knows what a mood stabilizer is. It's too basic to even reference.

So, is there any reason that seizure drugs are "mood stabilizers" (read: prophylactic) while antipsychotics are not? For antipsychotics, is there anything about their pharmacology, half-life, color, or pill size that a priori excludes them from the "mood stabilizer" category while including the seizure meds?

The artificiality of the terminology is confirmed when you actually look at the data: the only drugs listed here which actually are "mood stabilizers" are lithium, olanzapine and aripiprazole (over 6 months).

A study may eventually show Depakote is a mood stabilizer after all, but that's not my point. My issue is that in the absence of data or logical necessity, how can we take an arbitrary set of names and make unjustified deductions?

This is the semiotic trap of psychiatry. It doesn't actually matter what the data says (e.g. Depakote is not a mood stabilizer, Zyprexa is); what matters is the language, the categories. This isn't science. Just because there are graphs and chi-squareds doesn't make it science. There's no science here at all. At best it is linguistics. At worst, propaganda.

I'm not saying they are lying. It's worse than that. It's the structure of psychiatry. It's a subtle manipulation of reality to make people believe what you "already know" to be true. They are trying to convey a perspective, not report a finding. For example, later on the authors try to make the point that higher levels correlate with efficacy, but go too high and you get toxicity:

One analysis noted that serum valproate concentrations between 45-125 ug/ml were associated with efficacy, while serum valproate concentrations > 125 ug/ml were associated with an increased frequency of adverse effects. 19

This isn't what reference 19 says, exactly. What it says is that 45 is a pivot point; below it is not as good as above it. But it doesn't say that higher and higher levels give you better and better efficacy. What makes the omission of this rather important clarification all the more perplexing is that reference 19 was written by the same authors as this article.

But the damage has been done, again. Now you think you have read a statement in support of what you already assumed to be true. So you push the level.

You may argue that I am misinterpreting the author's words, that he never implied that efficacy had a linear relationship with level. Ok: prior to reading this blog, did you think that there was? Where did you learn that? Did you pull it out of the ether? No-- you skimmed articles like these that left you with half-truths, and never questioned it because everyone knows this already.

Let me show you what I mean. Here's the relationship of the Depakote level to maintenance treatment:

Higher serum levels were modestly but significantly correlated with less effective control of manic symptoms in a maintenance study (26). The study therefore supports a somewhat lower serum level range for maintenance treatment than for treatment of mania.

Did you know that? That the efficacy decreases as the level increases? I'm not asking if you believe it, I'm asking if you had ever heard it. Because if the answer is no, then there is something very, very wrong with the way we convey our knowledge. *

-------------------

*Contrary to the opinions of former girlfriends, I am not an idiot. I can plausibly explain this odd finding: the most manic patients got higher and higher doses, so the least responsive ended up getting the highest doses and levels. So it looks like higher levels were associated with decreased efficacy, when really the highest doses went to the sickest people. Ok, good explanation. But this supports my earlier point: you can't take something which requires a post hoc justification and use it to make a leap in logic to conclude something else.
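For the skeptical, the footnote's explanation can be sketched as a toy simulation. This is a minimal sketch under loud assumptions, none of which come from the maintenance study: severity is a made-up number between 0 and 1, doctors push the level higher for sicker patients, and the level itself has no effect whatsoever on outcome. The variable names and every coefficient are hypothetical.

```python
import random

random.seed(0)  # fixed seed so the toy example is repeatable

def pearson(xs, ys):
    """Plain Pearson correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

levels, residual_symptoms = [], []
for _ in range(1000):
    severity = random.random()  # assumption: 0 = mild, 1 = very manic
    # Assumption: sicker patients get titrated to higher serum levels.
    level = 50 + 75 * severity + random.gauss(0, 5)
    # Assumption: leftover symptoms depend ONLY on severity, not on the level.
    symptoms = 10 + 20 * severity + random.gauss(0, 3)
    levels.append(level)
    residual_symptoms.append(symptoms)

r = pearson(levels, residual_symptoms)
print(f"correlation between level and residual symptoms: {r:.2f}")
```

In this toy world the drug level does nothing, yet higher levels line up with worse symptom control, purely because the sickest people got the biggest doses. That is a plausible story, not a demonstrated one, which is the point of the footnote.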

Score: 3 (3 votes cast)

What Political Propaganda Looks Like

This is what a subscription to JAMA gets you:

RESULTS: Among the 201 women in the sample, 86 (43%) experienced a relapse of major depression during pregnancy. Among the 82 women who maintained their medication throughout their pregnancy, 21 (26%) relapsed compared with 44 (68%) of the 65 women who discontinued medication. Women who discontinued medication relapsed significantly more frequently over the course of their pregnancy compared with women who maintained their medication (hazard ratio, 5.0; 95% confidence interval, 2.8-9.1; P<.001). CONCLUSIONS: Pregnancy is not "protective" with respect to risk of relapse of major depression. Women with histories of depression who are euthymic in the context of ongoing antidepressant therapy should be aware of the association of depressive relapse during pregnancy with antidepressant discontinuation.

Read it again. What's the message they are trying to communicate?

The study found that pregnancy is not protective, and stopping your meds during pregnancy raises the risk of relapse. Any other way to interpret this abstract? Am I putting words in their mouths?

I read the entire article, with familiar horror. This was a naturalistic study that followed 201 women with MDD and their medication dosages and saw what happened. That this study had nothing to do with the "protective effect of pregnancy" is right now a secondary issue. The real problem is that the actual study says something very different than the Conclusions:

The study did find that more people relapsed if they stopped their medications. But it also found that more people relapsed if they increased their medications.

Exactly how were you to know this if you only read the abstract?

Don't you think that might have been important? The medication changes themselves are not the cause of the relapse-- how could stopping them and raising them both be bad?-- but are logically explained as representing something else.
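You can see the hole using nothing but the abstract's own numbers. The short Python sketch below just does the subtraction; the label "other" is mine, and the abstract itself never says what those women did with their medication.

```python
# Figures taken directly from the quoted abstract.
total, total_relapsed = 201, 86
maintained, maintained_relapsed = 82, 21
discontinued, discontinued_relapsed = 65, 44

# Whoever is left over: neither "maintained" nor "discontinued".
# (Label is an assumption; the abstract does not describe this group.)
other = total - maintained - discontinued
other_relapsed = total_relapsed - maintained_relapsed - discontinued_relapsed

def pct(k, n):
    """Relapse rate as a whole-number percentage."""
    return round(100 * k / n)

print(f"maintained:   {maintained_relapsed}/{maintained} = {pct(maintained_relapsed, maintained)}%")
print(f"discontinued: {discontinued_relapsed}/{discontinued} = {pct(discontinued_relapsed, discontinued)}%")
print(f"other:        {other_relapsed}/{other} = {pct(other_relapsed, other)}%")
```

That leaves 54 women, with 21 relapses among them, whom the abstract's Results never mention. Their relapse rate sits above the women who stayed on a stable regimen, and you would only learn who they were by reading the full article.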

The Conclusions should have read:

Conclusions: Taken together, these findings suggest that pregnant women who are stable (on medication) tend not to relapse, but those who are unstable (and need med changes or who go off them) relapse at higher rates.

The authors do address, slightly, this odd finding-- on the last page. But so what? Only liars read the last page. What makes this misrepresentation so egregious that it is near unforgivable in a journal of JAMA's arbitrary status is that they and we know doctors are not reading these studies from start to finish; for the most part, we skim over the abstracts. So we're going to skim over this abstract, it supports our existing prejudices so we don't give it a second thought, and go on with our deluded lives.

So to write the abstract this way is absolutely volitional, and absolutely misleading. The problem is not with the study, which was excellent, but with the presentation of the findings, which is psychiatric propaganda.

I would demand my subscription to JAMA be cancelled immediately if I had one in the first place.

---

But this isn't really the disturbing part.

What's really sad is that I am, apparently, the only one who noticed this. None of the three Letters To The Editor about this article complained about it. One of the three letters did complain, but not about the article-- rather about the authors' ties to drug companies. Yes, that again. That's what passes for critical thought nowadays. That's now the default moral high ground soundbite of bitter doctors, akin to "the war is just about Halliburton" or some other half-thought deduced from two hours of the Colbert Report and the table of contents of the New York Review of Books.

That's the problem. We're not critical of our fundamental principles. So we attack windmills. We doctors are conditioned (yes, conditioned) to find Pharma bias everywhere, and never to see-- so that we don't have to see-- the real bias in the way we have set up psychiatry. It's the same reason we spend so much time on statistics. Pharma and statistics are witches in The Crucible.

The bias isn't Pharma related. It's much more fundamental. What's at issue here is the approach, the worldview of the authors and psychiatrists everywhere. They are seeking to support the notion that antidepressants work and prevent relapse-- not even because that's what they believe, but because that's what psychiatry is. They are not asking a theoretical question and impartially looking for the truth; they're unconsciously trying to validate their existence. So they see what they want to see, and anything that isn't obviously in support of these postulates, this paradigm, is cursorily dismissed-- or is altered to mean something else. This is important: they're not hiding data, they just interpret it with the only paradigm they have.

Blaming Pharma is easy because it seems obvious-- money buys truth-- but also protects the blamer from needing to perform any actual critical thought, any internal audits of their prejudices. So what if Pharma bought those doctors start to finish? You still need to read the study and figure out how the buying altered the data, if it did. But that would be work.

10/30/06 Addendum: I sent a modified (i.e. nicer) version of this as a Letter to JAMA. It was rejected in less than a day.

Score: 10 (10 votes cast)

STAR*D Augmentation Trial: WRONG!

This is what a $150 subscription to the NEJM gets you:

From the abstract:

Conclusions Augmentation of citalopram with either sustained-release bupropion or buspirone appears to be useful in actual clinical settings.

I can't be the only person who actually reads the articles and not just the titles, can I? There has to be at least one other person?

565 Celexa failures (i.e. did not achieve remission) from the previous STAR*D trial were then randomized to Celexa (avg dose 54mg) + Wellbutrin or Celexa + Buspar. 30 percent of the augmented patients (either Wellbutrin or Buspar) achieved remission.

From this it is concluded "These findings show that augmentation of SSRIs with either agent will result in symptom remission."

How the hell do you conclude that? Is it a mere coincidence that the remission rates of Celexa+Wellbutrin in this group were the same as Celexa alone in the other study (30%)-- and the same as almost every other monotherapy trial for every other antidepressant?

In other words, how can you be sure it was the combination of Celexa+Wellbutrin that got the patients better, and not the Wellbutrin alone? What would have happened if you had given these patients Wellbutrin but taken them off Celexa? They would have done half as well? Are you sure?

I'm not saying that it might not be true that two drugs are better than one, I'm saying that this study doesn't show that. If anything, this study actually supports switching as a strategy (i.e. fail Celexa, so switch to Wellbutrin)-- because two drugs are not proven here to be twice as good as one alone, but I can certainly prove they carry twice as many side effects and are twice as expensive.

Here we have a massive expenditure of tax dollars that will undoubtedly lead to treatment guidelines that will be clinically misleading and economically wasteful. How much did the NIMH pay for this? And for CATIE? I'm not a Pharma apologist, but what was wrong with forcing Pharma to pay for their own studies which we get to pick apart? These government sponsored studies are no better. Gee-- the generic came out on top?

Score: 2 (2 votes cast)

Star-D Study Participants: What's Wrong With These People?

I don't even know what to make of this:

4041 patients show up and consent to be in a massive antidepressant trial, and almost 25% can't even score a HAM-D of 14? (7=complete cure.) Who are these people? What were they thinking?

And then of the ones who actually stay to participate (N=2876), their average HAM-D is 21? For two years?

And Celexa cures a third of these patients? Half of them in less than 6 weeks? After two years walking around HAM-D =21? Cures? Celexa? 40mg? Hello?

Remember, this is open label. These people, who presumably have been in psychiatric treatment for a long time (mean length of illness 15 years), know that they are taking 40mg of Celexa. Not a new experimental drug with a new mechanism of action. Celexa. 1/3rd get cured. After all this time.

BTW, the people who failed this Celexa study get moved into Star-D II. What is the relevance of this? Well, in this study 63% were female, 75% were white, 40% were married, 87% were high school grads or greater, 56% had jobs. It is the opposite of this demographic that is most likely not to have gotten better.

Evaluation of Outcomes with Citalopram for Depression Using Measurement-Based Care in STAR*D.

Score: 0 (0 votes cast)

CATIE Reloaded

And enough with the notion that medication compliance is a good proxy for overall efficacy.

All of these horrible psychiatry studies-- CATIE, Lamictal and Depakote maintenance trials, etc-- keep telling us how long patients stay on medications, because they say this means the drugs are working. The authors think that if a drug is working, the patient will stay on it. But you would think this only if you didn't actually treat many patients. I can make a similar argument that staying on a medication is inversely related to efficacy-- because when a patient feels better, they simply stop taking their meds.

Think about antibiotics. People don't finish the full 14 day course, precisely because they feel well. If they felt sick, they would probably take them longer than 14 days. In fact, people overuse these antibiotics even when it's a virus, despite the antibiotic having no efficacy at all. They will demand an antibiotic even though they know that it shouldn't be doing anything.

Same with pain meds. Oh, that's an acute problem? How about the chronic problems of diabetes and hypertension. People will skip/miss/forget doses when they feel asymptomatic, and will be more compliant when they have symptoms associated with these illnesses (e.g. headache, dizziness, etc.)

Look, I'm not telling you that compliance and efficacy aren't related. I am saying that if you want to measure efficacy, don't use compliance as a proxy-- go measure actual efficacy. And don't tell me it's too hard. You got $67 million for this study. Find a way.

Score: 6 (6 votes cast)

CATIE: Sigh

1. You know, if you're going to be rigorous about BID dosing schedules because the FDA requires it, why so liberal with total dosing for Zyprexa? A mean Zyprexa dose of 20.8mg is way (150%) above FDA guidelines. For comparison, that would have meant dosing Geodon at 240mg, Seroquel at 1000mg, and Risperdal at 6mg. BTW: a mean of 20.8mg means that a lot of people were dosed with MORE than 20.8mg (max=30mg).

2. The miracle here isn't that Zyprexa won, but that Zyprexa 20mg barely won against Geodon 114mg.

3. Why Trilafon (perphenazine)? Originally you thought all conventionals were the same; so why not Haldol? Or Mellaril? You say it's because it had lower rates of EPS and TD, which is fine, but then why exclude TD patients from that arm?

4. So you excluded patients with tardive dyskinesia from the perphenazine group (fine) but then had the nerve to say people tolerated it as well as other meds? Do you think maybe people who have TD may have different tolerances to meds? Different EPS? Different max doses? That they're just different?

5. You can't generalize from an obviously slanted "typical" arm to all other typicals. If you chose Trilafon over Haldol because of better tolerability a priori, you can't now say that "typicals" have equal tolerability to atypicals. Why not pick two typicals of differing potencies (like Mellaril and Haldol) and infer from there?

6. Do you actually believe-- does anyone believe-- that any of these patients are compliant with BID regimens? Especially with sedating meds like Seroquel?

The secret to understanding CATIE 2 is to understand that there are two CATIE 2s.

CATIE2-Efficacy: People who dropped out of CATIE 1 because their med didn't work were randomized to Clozaril, Zyprexa, Risperdal or Seroquel. On average, new Clozaril switches stayed on 10 months, everyone else only 3. 44% of Clozaril stayed on for the whole 18 month study; only 18% of the others completed the study.

CATIE2-Tolerability: People who dropped out of CATIE 1 because of side effects (not efficacy) were randomized to Zyprexa, Risperdal, Seroquel, and Geodon (not Clozaril.) Risperdal patients stayed on for 7 months, Zyprexa for 6, Seroquel for 4 and Geodon for 3.

CATIE2-Efficacy is fair. If you fail a drug, you're likely to do better on Clozaril than anything else.

CATIE2-Tolerability makes no sense at all. The reason Geodon was used is because it has "very different" side effects. Hmm. How? "In particular, ziprasidone [Geodon] was known not to cause weight gain." But this assumes that the intolerability of the first antipsychotic was its weight gain.

Most important is this: if a patient couldn't tolerate their first antipsychotic, how likely is it that it was effective? In other words, if it wasn't tolerable, it wasn't efficacious-- these patients could have been in the CATIE2-Efficacy study. So how did they choose?

Easy: they gave the patient the choice: Geodon or Clozaril? Out of 1052, half left altogether. 99 went into the Clozaril study (CATIE2-Efficacy) and 444 went into Geodon (CATIE2-Tolerability.) Of the 444 in the Tolerability trial, 41% were actually labeled first drug non-responders. 38% were labeled as not tolerating their first drug, but of those, who knows how many were also nonresponders?

And 74% dropped out again.

If you take the 444 in the Tolerability study and divide them into two groups:

- those who left CATIE1 because of lack of efficacy: then switching to Zyprexa or Risperdal kept them on their meds longer. (Which makes no sense again: this is the same thing as CATIE2-Efficacy, where (except for Clozaril) there was no difference between Seroquel, Zyprexa and Risperdal.)

- those who left CATIE 1 because of lack of tolerability, then it made no difference what you switched to.

Sigh.

And what's with the blinding? In every other study with a clozapine arm, you equalize the weekly blood draws by making everyone have to submit to them. But in this case, they unblinded clozapine so as not to have to subject all these people to blood sticks. Except they were subjecting them already-- they were checking blood levels.

And where was perphenazine? "[CATIE1] did not anticipate this unexpected result [that perphenazine would be as efficacious] that challenged the widely accepted (but never proven) belief that the newer atypical antipsychotic medications are better than all older antipsychotic medications" and so was not considered for CATIE2. Apart from the fact that it is simply untrue that anyone thought the atypicals were more efficacious than the typicals, it is furthermore untrue that the authors did not "anticipate this unexpected result." In 2003, after basically doing a Medline meta-analysis, they found that "not all of them were substantially different from conventionals such as perphenazine."

What's funny about these guys is how they conveniently lump all typicals together while arguing for differential effects of individual atypicals; then argue typicals are different from each other to justify picking Trilafon; and then say atypicals are different from each other ("not all of them were different") but typicals are all pretty much the same ("conventionals such as perphenazine.")

Bottom line:

The stated purpose of CATIE2 was to help clinicians decide which drug to switch to if patients a) failed their first drug; b) couldn't tolerate their first drug.

The divorce rate in America is 40-50%. Say you get divorced, and a friend says, I have two women for you, Jane and Mary. If the problem with your first wife was that she didn't turn you on, you should marry Jane. If the problem with your ex was that she was annoying, you should marry Mary.

What's going to happen here is that your second marriage, to either girl, is doomed. Certainly more than the national average of 50%. How long is it going to take before your second wife doesn't turn you on either? How long before you find stuff intolerable about her? The answer is, more likely than your first marriage-- say, 75%-- because the problem isn't your wives, it's you. You've framed the question in an idiotic and arbitrary manner. You don't get married to get turned on OR to be with someone who isn't annoying. You want the marriage to have both simultaneously, and much more. These things are not separable. This is CATIE2. A meaningless dichotomy-- efficacy and tolerability are not separate, let alone opposites-- used to create a false paradigm of medication selection.

Score: 3 (5 votes cast)

Is Schizophrenia Really Bipolar Disorder?

The answer should be so obvious that there shouldn't be an article on it. But there it is.

Lake and Hurwitz, in Current Psychiatry, conclude that schizophrenia is really a subset of bipolar disorder.

The authors' initial volley is (sentence 3):

The literature, including recent genetic data (1-6) marshals a persuasive argument that patients diagnosed with schizophrenia usually suffer from a psychotic bipolar disorder.

Well that's a pretty powerful assertion, supported by 6 different references. Except for one thing: none of the six references actually support that statement.

- Berrettini: finds that of the various regions of the genome connected to bipolar disorder in genome scans, two are also found in scans of schizophrenia. Regions that overlap-- not genes, or collections of genes, but entire chunks of chromosomes. He says there are (perhaps) shared genetic susceptibilities, not that they are the same disease.

- Belmaker: A review article. No new data.

- Pope: specificity of the schizophrenic diagnosis-- written in 1978.

- Lake and Hurwitz: says there's no such thing as schizoaffective disorder, which would be groundbreaking stuff if it weren't written by the same author as this article.

- Post: Review article talking about kindling in affective disorders. In 1992.

- DSM-IV. Seriously.

I'm game if you are: find the "persuasive argument" that these references "marshal" and then we have something to talk about. What makes all this so hard to fathom is not the movement to lump the two disorders together, but rather to lump them together under the more arbitrary, heuristic diagnosis. It is schizophrenia, not bipolar, that actually has physical pathology. Let's review:

Brain anatomical findings:

- white-gray matter volumes decreased in caudate, putamen and nucleus accumbens. 1

- deficits in the left superior temporal gyrus and the left medial temporal lobe.2 3

- moderate volume reduction in the left mediodorsal thalamic nucleus (but total number of neurons and density of neurons is about the same) 4

- reduced gray matter volume, reduced frontotemporal volume, and increased volume of CSF in ventricles 5 and 1

Physical features:

- Larger skull base and larger lower lip 1

- Velo-cardio-facial syndrome (22q11 deletion) 2

- vertical elongation of the face 3

- high arched palate 4

Granted, these aren't great; but try to find anything like this for bipolar.

Clearly, the Kool-Aid is delicious because they want us to drink it, too.

In the final section, magnificently entitled "What is standard of care?" the authors pronounce:

Antidepressants appear to be contraindicated, even in psychotic bipolar depressed patients.14,15 We suggest that you taper and discontinue the initial antipsychotic when psychotic symptoms resolve.

Which is great, except it's not true. If the authors have some evidence that antidepressants actually increase the switch rate, I'd love to see it: but for sure references 14 and 15 aren't it. At least, could "contraindicated" be a tad overstated?

The last two sentences of the whole article:

The idea that “symptoms should be treated, not the diagnosis” is inaccurate and provides substandard care. When psychotic symptoms overwhelm and obscure bipolar symptoms, giving only antipsychotics is beyond standard of care.

No references given for these outrageous statements, but given the relevance of their previous references I guess it really doesn't matter. "Substandard care?" "Beyond the standard of care?" Really? I'll see you in court.

Score: 5 (5 votes cast)
